Introduction

Ask an IT architect for a “proper” design, and you’ll get the usual: Multi-AZ, auto-scaling, load balancers, and active-passive failover. It looks clean on a diagram.

When you are still validating your business idea, that is usually the wrong priority.

You don’t need “five nines” (99.999%) of availability. You need a runway. And you need an engineering reality that matches your business stage.

Your early-stage architecture should feel uncomfortably cheap.

The Cost of “Just In Case”

Resilience is not automatically “best practice.” It’s a premium insurance policy, and it multiplies your costs when you buy more coverage than you really need. That “policy” shows up as:

- higher monthly infrastructure spend

- higher operational complexity (more to deploy, monitor, and debug, all of which costs engineering time)

Let’s do the basic math:

- Load balancing: add a managed load balancer. Impact: higher cloud bill, more moving parts, higher complexity.

- High Availability (HA): run two of everything to survive a zone failure. Impact: roughly 2x the infrastructure cost.

- Disaster Recovery (DR): replicate to a second region. Impact: 2x again, plus real engineering overhead (data, failover, testing, runbooks, compliance questions).

That’s how a “simple” $500/month stack becomes $2,000/month: 2x for HA, 2x again for DR, before you count the load balancer. The cloud bill is visible. The hidden cost is the engineering time you lose to maintaining a system you don’t need yet.
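The arithmetic above can be written down as a minimal sketch. Only the $500 baseline and the two 2x multipliers come from the text; the function name, its parameters, and the optional load balancer fee are illustrative assumptions, and real pricing rarely doubles this cleanly.

```python
# Only the $500 baseline and the 2x multipliers come from the article.
# Everything else (names, the optional load balancer fee) is hypothetical.
BASE_MONTHLY_USD = 500


def monthly_cost(base, ha=False, dr=False, load_balancer_usd=0):
    """Estimate monthly spend after layering redundancy on a single-instance stack."""
    cost = base
    if ha:
        cost *= 2  # run two of everything to survive a zone failure
    if dr:
        cost *= 2  # replicate the whole footprint to a second region
    return cost + load_balancer_usd


print(monthly_cost(BASE_MONTHLY_USD))                    # 500
print(monthly_cost(BASE_MONTHLY_USD, ha=True))           # 1000
print(monthly_cost(BASE_MONTHLY_USD, ha=True, dr=True))  # 2000
```

Plug in your own baseline and your provider's load balancer fee to see what each layer of “just in case” costs per month.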

If you’re building hardware-enabled SaaS, you have a leverage point most SaaS companies don’t: the EDGE.

The Industrial Advantage: Put Resilience Where It Belongs

In generic SaaS, a backend outage stops the business. In hardware-enabled systems (logistics trackers, factory sensors, energy monitors), devices can keep the operation alive.

A solid device management strategy makes a cloud outage manageable:

- Connectivity drops? Devices buffer data locally.

- API down? Devices retry communication with exponential backoff + jitter.

- Backend deploy goes sideways? Devices keep operating as usual until the rollback finishes.

- Bad config pushed? Devices keep operating on the last known-good config.
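The behaviors in the list above can be sketched in a few lines of device-side logic. This is a simplified illustration, not a reference implementation: `DeviceUplink`, `send`, `choose_config`, and `is_valid` are hypothetical names, the delays use the “full jitter” variant of exponential backoff, and the buffer lives in memory, whereas a real device would likely persist it to flash or disk.

```python
import random
import time
from collections import deque


def backoff_delays(base=1.0, cap=300.0, attempts=6, rng=random.random):
    """Yield 'full jitter' delays: a random value in [0, min(cap, base * 2**n))."""
    for n in range(attempts):
        yield rng() * min(cap, base * 2 ** n)


class DeviceUplink:
    """Buffers readings locally and drains them once the backend is reachable."""

    def __init__(self, send, max_buffered=10_000, sleep=time.sleep):
        self.send = send  # hypothetical callable; assumed to raise ConnectionError on failure
        self.buffer = deque(maxlen=max_buffered)  # when full, the oldest reading is dropped
        self.sleep = sleep

    def record(self, reading):
        self.buffer.append(reading)

    def flush(self):
        """Try to drain the buffer; return True if everything was sent."""
        for delay in backoff_delays():
            try:
                while self.buffer:
                    self.send(self.buffer[0])
                    self.buffer.popleft()  # remove only after a successful send
                return True
            except ConnectionError:
                self.sleep(delay)  # back off, then retry from the same reading
        return False  # give up for now; readings stay buffered for the next flush


def choose_config(candidate, last_known_good, is_valid):
    """Apply a pushed config only if it validates; otherwise keep the old one."""
    return candidate if is_valid(candidate) else last_known_good
```

The design choice that matters is that a reading leaves the buffer only after a confirmed send, so a backend outage or a failed deploy costs you latency rather than data.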

If physical assets stop working because your cloud blinked for 5 minutes, that’s not “an HA problem.” That’s a systems design problem at the edge.

Keep the cloud dumb, cheap, and singular. Make the fleet resilient.

The “Scale Vertical” Rule (Especially in IoT)

Reality check: in hardware operations, load is usually predictable. You know how many devices communicate with your system. You know their reporting cadence. You can prepare for the “worst case” scenario.

Before you build a distributed system, try the simpler option:

- scale up one server

- scale up one database

- optimize the ingestion path

- watch CPU/IO and move when you have evidence (not earlier)

At this stage, vertical scaling is cheaper, easier to debug, and far less complex than a distributed system.
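Because fleet size and reporting cadence are known, the “worst case” is a back-of-the-envelope calculation. The sketch below assumes a hypothetical fleet of 20,000 devices reporting once a minute and an assumed 5x burst when devices reconnect and drain their buffers after an outage; none of these figures come from a real deployment.

```python
def peak_messages_per_second(devices, report_interval_s, burst_factor=1.0):
    """Ingest rate for a fleet, scaled by an assumed burst factor."""
    return devices / report_interval_s * burst_factor


# Hypothetical fleet: 20,000 devices, one report every 60 seconds.
steady = peak_messages_per_second(20_000, 60)

# Assumed worst case: devices reconnect after an outage and send at 5x for a while.
worst = peak_messages_per_second(20_000, 60, burst_factor=5.0)

print(f"steady: {steady:.0f} msg/s, worst case: {worst:.0f} msg/s")
```

If a single well-sized server and database handle the worst-case number with headroom, you have your evidence that scaling out can wait.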

So What Should You Do Instead?

Don’t confuse “cheap” with “sloppy.” The proper early-stage posture is simple architecture with disciplined execution. In practice, that means:

- backups and restore tests (not “we enabled backups”)

- infrastructure as code, so rebuild is real

- monitoring + alerting tied to operational impact

- clear SLA that matches what customers actually need today

- security defaults (least privilege, secrets handling, patching cadence)

This is operational resilience without paying the multi-region tax.

When to Pay the Premium

Buy HA/DR when the economics force you to:

- when 1 hour of downtime costs more than the monthly redundancy bill

- when you have an SLA with real penalties

- when the business is mature enough that availability is a revenue feature, not a nice-to-have

Until then, a rare outage is a manageable risk. Running out of cash because you over-engineered your stack is not.
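The first trigger can be turned into a rough break-even check. This is a sketch under stated assumptions: the function name and all dollar figures are hypothetical, and `expected_outage_hours_per_month` is my addition. Setting it to 1 reproduces the one-hour rule of thumb above.

```python
def redundancy_pays_off(downtime_cost_per_hour,
                        extra_redundancy_cost_per_month,
                        expected_outage_hours_per_month=1.0):
    """True when expected monthly downtime losses exceed the extra redundancy bill."""
    expected_loss = downtime_cost_per_hour * expected_outage_hours_per_month
    return expected_loss > extra_redundancy_cost_per_month


# Hypothetical early-stage pilot: an hour of downtime costs about $200,
# while HA/DR would add $1,500/month to the bill.
print(redundancy_pays_off(200, 1_500))    # False

# Hypothetical later stage: an hour of downtime costs $5,000.
print(redundancy_pays_off(5_000, 1_500))  # True
```

Revisit the numbers whenever you sign a contract with SLA penalties, since those change the downtime cost directly.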

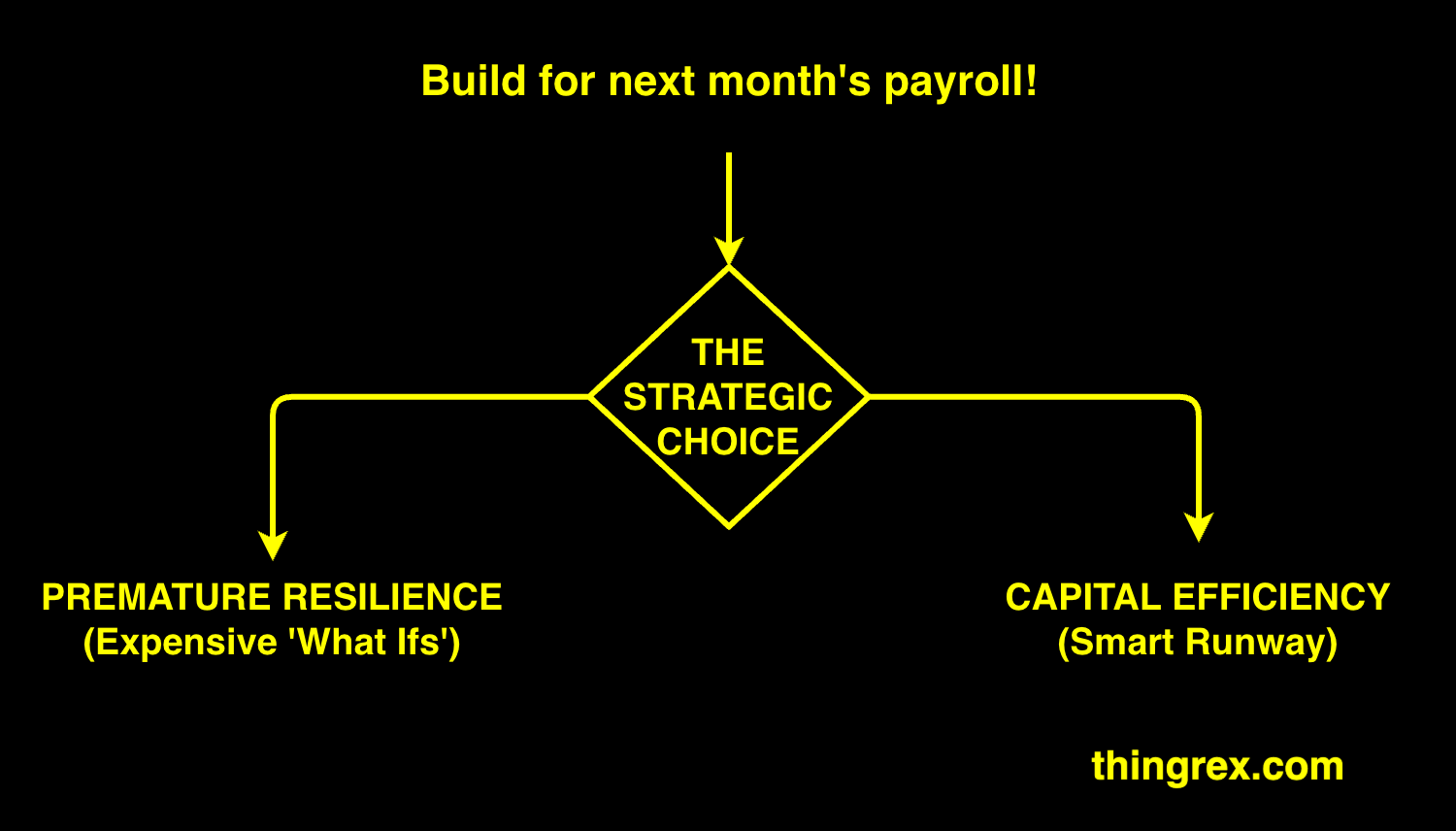

Stop building for Google scale. Build for next month’s payroll.

Contact me if your cloud bill is bleeding cash on “just in case” infrastructure. I help hardware-enabled founders align their systems with their business needs.